Concepts in LLM Security

1. Adversarial Robustness

LLMs are vulnerable to prompt injection, jailbreaks, and adversarial attacks.

- Main Works / Research:

- Prompt Injection Attacks (Greshake et al., 2023): Showed how malicious instructions embedded in prompts or data can override model behavior.

- Universal Adversarial Triggers: Small changes in text that cause misclassification or unsafe outputs.

- Techniques:

- Adversarial training: Expose the model to adversarial inputs during training.

- Input sanitization & filtering: Detect and strip malicious instructions.

- Defense-as-a-Service: External layers that evaluate prompt safety before sending to the LLM (e.g., Guardrails, Rebuff).

2. Content Safety & Guardrails

LLMs can generate harmful, biased, or unsafe content.

- Main Tools / Research:

- OpenAI Moderation API: Filters for categories like hate speech, self-harm, violence.

- NVIDIA NeMo Guardrails: Defines “rails” (policies, topics, safety constraints) for conversation flow.

- Anthropic’s Constitutional AI: Aligns models to follow a written “constitution” of safety rules instead of only relying on human feedback.

- Techniques:

- Post-processing filters on LLM output.

- Rule-based or ML classifiers for harmful content detection.

- Fine-tuning with Reinforcement Learning from Human Feedback (RLHF) or AI Feedback (RLAIF).

3. Privacy & Data Security

Models can memorize and leak training data.

- Main Works / Research:

- Carlini et al. (2021, 2022): Demonstrated extraction of training data from LLMs via model inversion.

- Membership inference attacks: Detect if specific data points were in training.

- Techniques:

- Differential Privacy (DP) training: Adds noise during training to prevent memorization.

- Red-teaming for data leakage: Testing models with targeted extraction queries.

- Data minimization and filtering: Scrubbing sensitive info before training.

4. Misuse Prevention (Model Safeguards)

Stopping malicious uses like malware generation or misinformation.

- Main Tools / Approaches:

- Model Cards & Usage Policies (Mitchell et al., 2019): Documentation of limitations and allowed uses.

- API-level safeguards: Restrict capabilities like code execution or system commands.

- Access control & rate limiting: Prevent large-scale abuse (e.g., botnet creation).

- Techniques:

- Red-teaming models against misuse scenarios.

- Restricting high-risk capabilities (e.g., chemistry, bioterror content).

5. Alignment & Human Values

Ensuring models behave consistently with human values.

- Main Works / Research:

- RLHF (Christiano et al., 2017; Ouyang et al., 2022): Train with human preferences to improve helpfulness and harmlessness.

- Anthropic’s Constitutional AI: Use high-level written principles instead of direct human labeling.

- Techniques:

- Human feedback collection for fine-tuning.

- Self-refinement (model critiques and improves its own output).

- Scaling laws for alignment: More parameters/data do not automatically improve alignment.

6. Explainability & Interpretability

Understanding why models make certain outputs.

- Main Works / Tools:

- Attention visualization: Heatmaps of attention weights in transformers.

- Feature attribution (Integrated Gradients, SHAP, LIME).

- Mechanistic interpretability (Anthropic, OpenAI): Studying circuits/neurons in LLMs.

- Techniques:

- Interpretability audits before deployment.

- Identifying “steering vectors” (features that control toxicity, bias, etc.).

7. Evaluation & Benchmarking

Quantifying safety and robustness.

- Main Tools / Benchmarks:

- HELM (Holistic Evaluation of Language Models) by Stanford: Covers accuracy, robustness, calibration, fairness, efficiency.

- BIG-bench, TruthfulQA, HaluEval: Evaluate hallucinations, truthfulness, adversarial robustness.

- Red-teaming frameworks (Anthropic, OpenAI): Structured testing against safety challenges.

8. Deployment Security (Ops & Infra)

LLM security is not just about the model — also about the serving infrastructure.

- Risks: Prompt injection leading to API misuse, prompt chaining attacks, data poisoning.

- Defenses:

- Sandboxing model outputs (e.g., code execution in secure containers).

- Rate-limiting and anomaly detection in API use.

- Model access monitoring (logging, auditing queries).

Summary

The security & safety of LLMs is an active, multi-layered field:

- Adversarial robustness → defend against prompt injections, jailbreaks.

- Content safety → guardrails, moderation filters, Constitutional AI.

- Privacy → differential privacy, preventing data leakage.

- Misuse prevention → usage policies, access control, red-teaming.

- Alignment → RLHF, RLAIF, constitutions, value alignment.

- Interpretability → mechanistic analysis, feature attribution.

- Evaluation → HELM, TruthfulQA, adversarial benchmarks.

- Operational safeguards → API monitoring, sandboxing, rate limiting.

Pretrained Model

- A model that has already been trained on a dataset (often large and general-purpose).

- Example: ResNet50 pretrained on ImageNet, BERT pretrained on Wikipedia + BooksCorpus, GPT models pretrained on web data.

- You can use it as-is (for inference) or fine-tune it for a downstream task.

Base Model

- Usually refers to the original, general-purpose pretrained model before fine-tuning or adaptation.

- It’s the “foundation” model from which variants are derived.

- Example:

- BERT-base (the base pretrained BERT, before fine-tuning for QA or classification).

- GPT-3 base model vs. GPT-3 fine-tuned for code (Codex).

- In vision, a ResNet base model is the pretrained backbone before adding custom classification heads.

Some risk of adopting LLMs

- Operationalization: Increasing Legal Liability

- Cyber: Introducing new vulnerabilities

- Explainability: Answers not justified

- Ethics: violation of society expectations

- Reputation: Damaging brand, public disgrace

- Social Implications: Spreading misinformation

- Accuracy: Asserting incorrect information as fact

- Bias: prejudicial or preferential propostions

- Data integrity: Untrustworthy source of data

- Behavioral:

Tricking and manipulating people

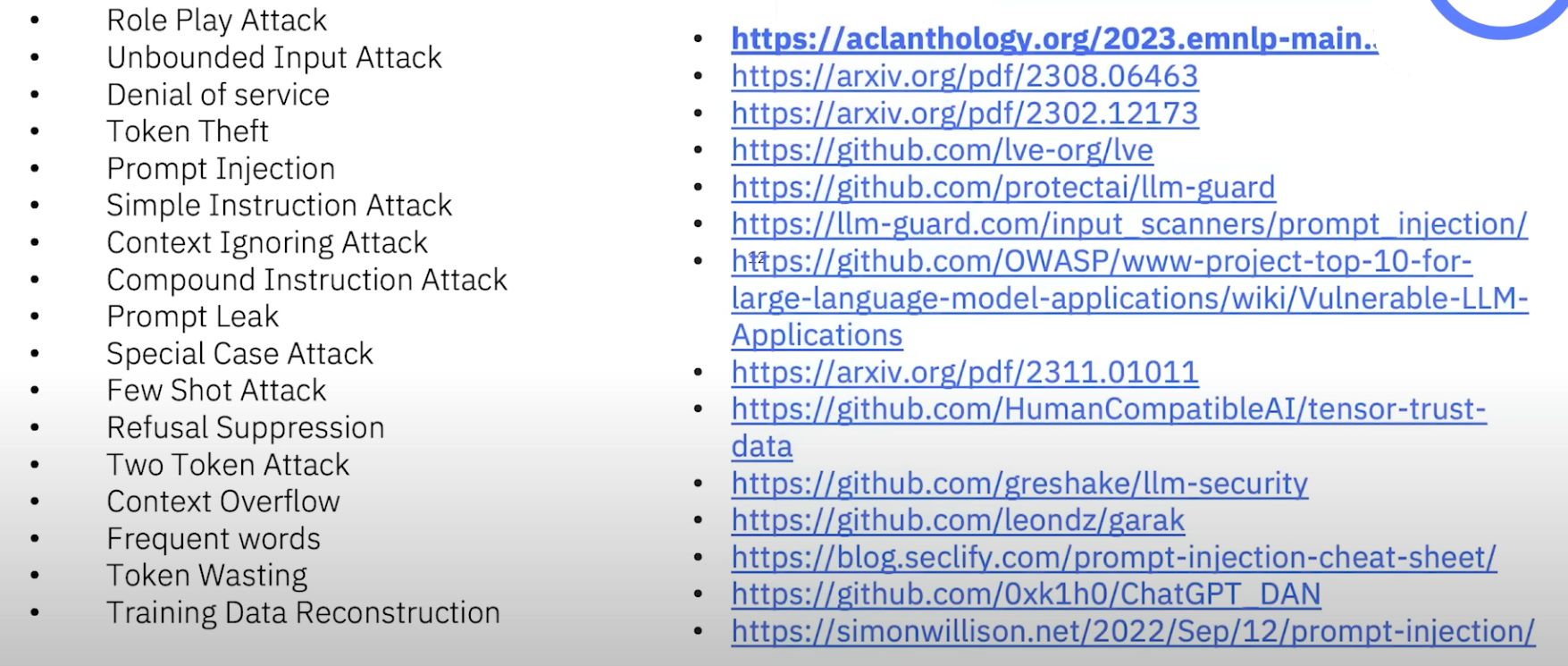

Attacks on LLMs